Table of Contents

Sonar

More specifically, passive SONAR.

This page is half presentation, half semi-coherent conversation with myself as I work through the problem of passive sonar, going from theory to implementation.

Operational Theory

Sensor Setup

Let's suppose we have a pair of audio transducers aka microphones. They are setup as below (m1 for microphone 1). Let's call the distance they are apart ds.

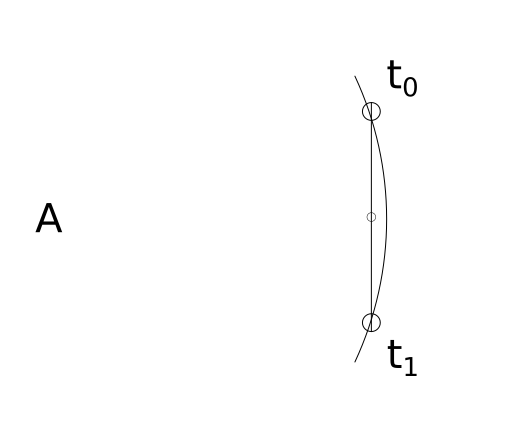

Perpendicular to Source

If this pair of microphones are perpendicular at their midpoint to a sound source (`A`), they will both hear/observe the sound source at the same moment. That is to say, if we noted the time when each microphone heard `A`, the difference in time would be `0`.

The curve represents the leading edge of the pressure wave (sound wave) emitted by sound source A. t0 represents the time in which the first microphone hears the sound and t1 represents the time when the second microphone hears the sound. If t1 - t0 = 0, the line formed between the sound source and the midpoint must be perpendicular to the line formed by the two microphones.

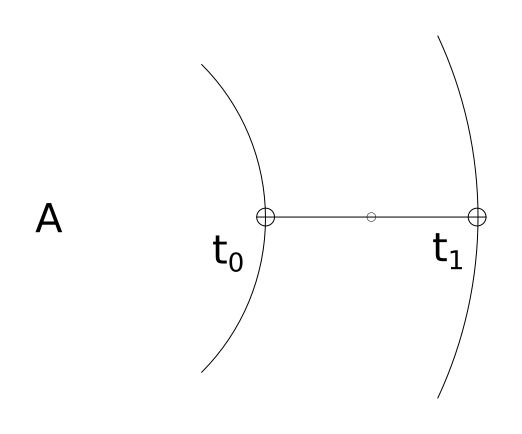

Colinear with Source

Suppose the pair of microphones have their midpoint (as well as themselves) colinear with sound source `A` as in the diagram below. The distance between the two microphones is known. That distance we have previously defined as ds. But this distance could also be determined by multiplying the time difference by the speed of sound1).

The key takeaway is that the time difference observed by the microphones gives the distance the sound wave traveled between the first microphone and the second microphone.

distance traveled by the wave between microphones = ( t1 - t0 ) * Ss

Thus if the distance traveled by the sound wave between m1 and m2 is 0, that means the sound source is perpendicular (at 90°) to the midpoint of the microphones

and

if the distance traveled by the sound wave between m1 and m2 is equal to ds, the distance of the microphones themselves, we know the sound source is colinear (at 0°) to microphones and their midpoint.

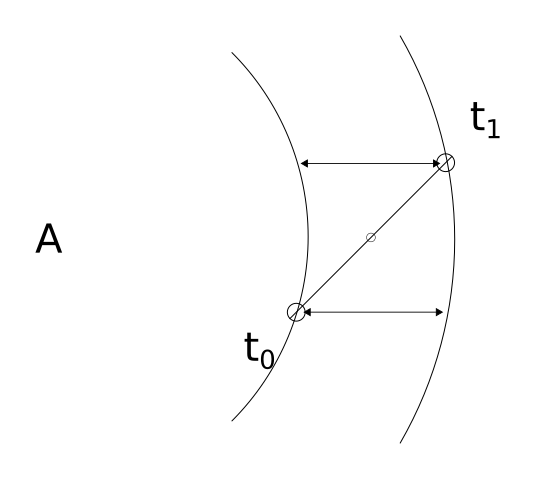

Somewhere between Colinear and Perpendicular

Because the distance the wave travels clearly varies relative to its angle from the midpoint, we know there must be some calculation to determine this angle.

Again, the distance the wave travels between m1 and m2 is determined by the time difference in observation. This distance is represented on the below diagram via the two lines with arrows.

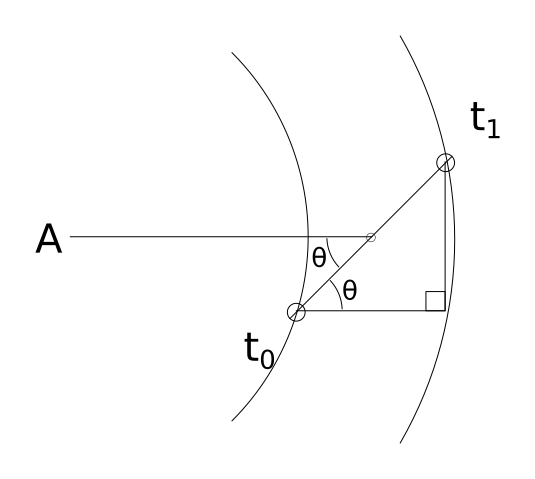

Forming a Right Triangle

We can use this information to draw a triangle. The hypotenuse of the triangle is ds, the known distance between our microphones. The bottom leg is the distance determined via (t1 - t0)*Ss. Because the bottom leg of our triangle is parallel to the line going from the micropone midpoint to the sound source, the bottom left angle denoted by θ must be the same angle that is formed between the line connecting the microphones and the line connecting the midpoint to the sound source. See the below diagram.

θ can be determine with a little trigonometry. Since cos(θ) = adjacent/hypotenuse, we know that θ = arccos(adjacent/hypotenuse).

θ = arccos( [ (t1 - t0)*Ss ] / ds )

A line that passes through the microphone midpoint, at an angle of θ must, at some point, pass through the sound source.

Triangulating

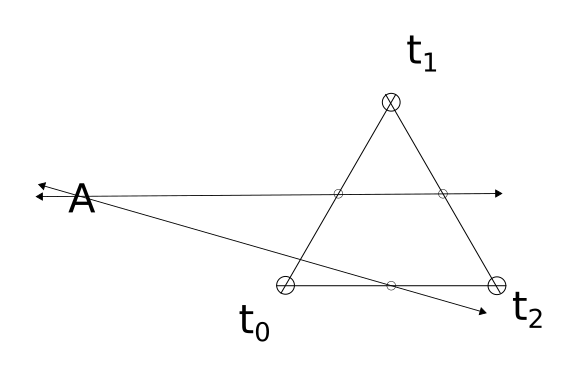

If a third microphone is added, we can repeat this whole process one more time with a different pair of microphones giving a second line pointing to the sound source. The point where these lines intersect is the location of the sound source. See below diagram.

Implementation

How does one turn the theory above into a working implementation?

Hardware

3x USB microphones mounted in an equilateral triangle configuration, connected to a computer (such as a raspberry pi) for signal processing.

Software

The software must monitor the signal from each microphone continually in 3μs rolling windows to attain 1mm resolution. This is not 1mm of accuracy to the source, but 1mm of accuracy for determining the distance the sound wave travels between the two microphones.

3μs gets 1mm resolution. The greater the distance the microphones are mounted apart, the greater resolution one can obtain. If the sensors are mounted 300mm apart, that means about 0.6 degrees of radial resolution.

At 3μs and sensors mounted 300mm apart, the sonar system can produce an angles from 0-180 in 0.6 degree increments.

A Fourier Transform is performed on each signal to isolate sounds and help connect each signal uniquely. The Fourier transforms allows signals below a certain amplitude to be ignored as well.

A simplified algorithm may work–one that only uses an amplitude threshold.

The math is pretty easy. Assuming we are using signed doubles, just take the average of the absolute value of each sample within the rolling sample window.

Taking Hardware Limitations into Consideration

Pretty standard sample rate is 44100hz. (44100hz)^-1 is 22.7μs. If we keep a sensor distance of 300mm, we can, at best detect distance in 9.9mm chunks. 9.9mm / 300mm * 180° = 5.9°.

So we would have an arc resolution of 5.9°. Call it 6°. That's not great, but for a screwing around project that only costs about $30 worth of hardware it may be good enough.

More Math

Once we have determined theta for two pairs of microphones, we need to find where the lines intersect.

Let x1,y1 be the first midpoint and x2,y2 be the second midpoint.

Assume the microphones are in an equilateral triangle configuration, the triangle's center of area is the origin point (0,0).

The top most microphone is on the y-axis.

The sound source is located at (xs,ys).

b1 is the y-intercept for the first line. b2 is the y-intercept for the second line.

tan(θ) will give the slope of a line, relative to the x-axis. However, when θ is determined, it is relative to the line formed by a pair of microphones. Thus, depending on which pair we are dealing with, θ must be adjusted.

If it is the bottom pair of microphones, no adjustment is needed.

If it is the left and top pair, use θ + 60.

If it is the top and right pair, use θ - 60.

Assume from here forward, θ? means an appropriately adjusted θ.

To determine b1:

b1 = y1 - tan(θ1) * x1

Repeat to determine b1.

Determine x1:

xs = ( b2 - b1 ) / ( tan(θ1) - tan(θ2) )

ys can be determined by substituting xs back into either of the previous equations.

ys = tan(θ1) * xs + b1

Code

Some very rough, very ugly, probably non-functional code.

This assumes a lot. But is based on everything written above this.

Will test and update once I get some hardware. Once I verify the code works (or rather, once I test it and fix all the bugs I find) I'll restructure the code out into more manageable functions.

- sonar.h

#define MACH 343 // speed of sound in m/s /*#define ds 0.3 // distance between sensors in m (should be in equilateral triangle shape)*/ #define ns_to_s(x) (x / 1000000000) // convert nanoseconds to seconds #define pi 3.14159265358979323846 // ... pi. Like the constant, y'know? /* relative positions of sensors */ #define TOP 0 #define LEFT 1 #define RIGHT 2 #define TOPLEFT 0 #define LEFTRIGHT 1 #define RIGHTTOP 2 typedef struct { double x; double y; } coord; typedef struct { int sensor_id[3]; PaStream *sensor_stream[2]; double ds; int16_t sensor_data[2]; int16_t threshold; } sonar_config; void sonar_init (void); void sonar_listdev (void); void sonar_getloc ( coord *loc, sonar_config conf ); int sonar_coord_cmp (coord a, coord b);

- sonar.c

#include <stdio.h> #include <stdlib.h> #include <stdint.h> #include <time.h> #include <math.h> #include <portaudio.h> #include "sonar.h" void sonar_init (void) { /* init portaudio */ PaError err; if ( (err = Pa_Initialize()) ) { printf ( "Failed to init: %s\n", Pa_GetErrorText ( err ) ); exit ( -1 ); } /* uh.. sure */ atexit((void (*)(void))Pa_Terminate); } void sonar_set_midpoints (coord *mps, double l) { /* determine the midpoints of a triangle of length l. * This assumes the triangle is equilateral and centered * on the origin point. */ double h_bigt; /* height of big triangle */ double r_bigt; /* radius of big triangle */ /* small triangle = triangle formed by midpoints of big triangle */ double r_smallt; /* radius of small triangle */ double l_smallt; /* length of side of small t */ double m; /* gives midpoint y coord. */ /* use pythagoras to determine height of equilateral triangle */ h_bigt = sqrt( pow(l, 2) - pow( l/2, 2) ); /* radius of an equilateral triangle = length of side / sqrt(3) */ r_bigt = l / sqrt(3); /* the difference in the height of bigt and its radius gives radius of smallt */ r_smallt = h_bigt - r_bigt; l_smallt = sqrt(3) * r_smallt; /* use pythagoras again */ m = sqrt( pow(r_smallt, 2) - pow( l_smallt / 2, 2) ); mps[TOPLEFT].x = -l_smallt / 2; mps[TOPLEFT].y = m; mps[RIGHTTOP].x = l_smallt / 2; mps[RIGHTTOP].y = m; mps[LEFTRIGHT].x = 0; mps[LEFTRIGHT].y = -r_smallt; } void sonar_listdev (void) { /* Figure out input devices we have */ PaDeviceIndex dev; const PaDeviceInfo *dev_info; for ( dev = 0; dev < Pa_GetDeviceCount(); dev++ ) { dev_info = Pa_GetDeviceInfo ( dev ); printf ( "Index: %d\n" "Name: %s\n" "Input Channels: %d\n" "Sample Rate: %f\n" "Default Low Input Latency: %f\n" "\n", dev, dev_info->name, dev_info->maxInputChannels, dev_info->defaultSampleRate, dev_info->defaultLowInputLatency ); } } int sonar_coord_cmp (coord a, coord b) { return (a.x == b.x && a.y == b.y); } void sonar_getloc ( coord *loc, sonar_config conf ) { unsigned int i; PaError err; coord midpoint[3]; coord mp_order[3]; coord mp_map[3][3]; sonar_set_midpoints (midpoint, conf.ds); mp_map[LEFT][RIGHT] = midpoint[LEFTRIGHT]; mp_map[RIGHT][LEFT] = midpoint[LEFTRIGHT]; mp_map[TOP][LEFT] = midpoint[TOPLEFT]; mp_map[LEFT][TOP] = midpoint[TOPLEFT]; mp_map[RIGHT][TOP] = midpoint[RIGHTTOP]; mp_map[TOP][RIGHT] = midpoint[RIGHTTOP]; PaStreamParameters sparams[2]; for ( i = 0; i < 3; i++ ) { sparams[i].device = conf.sensor_id[i]; sparams[i].channelCount = 1; sparams[i].sampleFormat = paInt16; sparams[i].suggestedLatency = 0; sparams[i].hostApiSpecificStreamInfo = NULL; } for ( i = 0; i < 3; i++ ) if ( (err = Pa_OpenStream ( &conf.sensor_stream[i], &sparams[i], NULL, 44100, paFramesPerBufferUnspecified, paNoFlag, NULL, NULL )) ) { printf ( "Failed to open stream for device %d: %s\n", conf.sensor_id[i], Pa_GetErrorText ( err ) ); exit ( -2 ); }; struct timespec t[3]; double theta[3]; double b[3]; int t_to_m[3]; int order = 0; for ( i = 0; i < 3; i++ ) t[i].tv_sec = 0; for ( i = 0; i < 3; i++ ) Pa_StartStream ( conf.sensor_stream[i] ); for (;;) { for ( i = 0; i < 3; i++ ) { err = Pa_ReadStream ( conf.sensor_stream[i], &conf.sensor_data[i], 1 ); if (! ( paInputOverflowed == err || err == paNoError ) ) { fprintf ( stderr, "Failed to read stream: %s\n", Pa_GetErrorText ( err ) ); exit ( -3 ); } } for ( i = 0; i <= 2; i++ ) { if ( abs( conf.sensor_data[i] ) > conf.threshold && t[i].tv_sec != 0 ) { clock_gettime ( CLOCK_REALTIME, &t[i] ); t_to_m[order++] = i; } } if ( order > 2 ) { order = 0; for ( i = 0; i < 3; i++ ) t[i].tv_sec = 0; /* * Find Thetas */ /* between t0 and t1 */ theta[0] = acos ( (ns_to_s(t[1].tv_nsec - t[0].tv_nsec) * MACH) / conf.ds ); /* between t1 and t2 */ theta[1] = acos ( (ns_to_s(t[2].tv_nsec - t[1].tv_nsec) * MACH) / conf.ds ); /* between t0 and t2 */ theta[2] = acos ( (ns_to_s(t[2].tv_nsec - t[0].tv_nsec) * MACH) / conf.ds ); /* * Map midpoints to sensors */ mp_order[0] = mp_map[t_to_m[0]][t_to_m[1]]; mp_order[1] = mp_map[t_to_m[1]][t_to_m[2]]; mp_order[2] = mp_map[t_to_m[0]][t_to_m[2]]; /* * Adjust thetas depending on midpoint */ for ( i = 0; i < 3; i++ ) { if ( sonar_coord_cmp ( mp_order[i], midpoint[TOPLEFT] ) ) { theta[i] += pi / 3; } else if ( sonar_coord_cmp ( mp_order[i], midpoint[RIGHTTOP] ) ) { theta[i] -= pi / 3; } } /* * Determine b[]s (y-intercepts) */ for ( i = 0; i < 3; i++ ) b[i] = mp_order[i].y - tan(theta[i]) * mp_order[i].x; /* * Determine X of loc (TODO: Average together all loc.x) */ loc->x = ( b[1] - b[0] ) / ( tan(theta[0]) - tan(theta[1]) ); /* * Determine Y of loc (TODO: Average together all loc.y) */ loc->y = tan(theta[0]) * loc->x + b[0]; } } }

- main.c

/* gcc -Wall -std=gnu11 -O2 -fno-aggressive-loop-optimizations -pedantic -lportaudio -lm *.c -o sonar */ #include <stdio.h> #include <stdlib.h> #include <unistd.h> #include "sonar.h" int main ( int argc, char **argv ) { int opt; int sensor_id[3] = {-1, -1, -1}; int verbose=0; sonar_config conf; conf.ds = 0; while ( (opt = getopt(argc, argv, "t:l:r:d:vs")) != -1 ) { switch (opt) { /* specify sensor */ case 't': case 'l': case 'r': /* not clever, just lazy */ if ( 1 != sscanf(optarg, "%d", &sensor_id[(opt == 't') ? 0 : (opt == 'l') ? 1 : 2]) ) { perror("Failed to parse -<t|l|r> argument"); exit(-1); } break; case 'd': /* specify ds (distance between sensors) */ conf.ds = strtod(optarg, NULL); if ( conf.ds > 343 ) { fprintf ( stderr, "Specified sensor distance is too large. Must be < 343\n" ); exit(-1); } if (conf.ds < 0.1) { fprintf ( stderr, "Specified sensor distance is too small. Must be > 0.1\n" ); exit(-1); } break; case 's': /* list audio input devices */ sonar_init(); sonar_listdev(); exit(0); break; case 'v': verbose = 1; break; default: //fprintf ( stderr, "Usage: %s -l | -s <sensor1> -s <sensor2> -s <sensor3> -d <distance in meters> \n", argv[0] ); exit(-1); } } /* done processing arguments, make sure arguments make sense */ if ( -1 == sensor_id[0] || -1 == sensor_id[1] || -1 == sensor_id[2] ) { fprintf ( stderr, "Error: 3 sensors, -t (top) -l (left) and -r (right) must be specified.\n" ); exit(-1); } if ( conf.ds == 0 ) { fprintf ( stderr, "Error: Sensor distance (-d) must be set.\n" ); exit(-1); } if ( sensor_id[0] == sensor_id[1] || sensor_id[0] == sensor_id[2] || sensor_id[1] == sensor_id[2] ) { fprintf ( stderr, "Error: Each sensor (-t,-l,-r) must be unique.\n" ); exit(-1); } if ( verbose ) { printf ( "Sensors: %d, %d, and %d\n" "Distance between sensors: %fm", sensor_id[0], sensor_id[1], sensor_id[2], conf.ds ); } /* init audio hardware */ sonar_init(); /* sonar_openstreams(sensor_id); sonar_ */ coord loc; for (;;) { sonar_getloc(&loc, conf); sleep(1); } return 0; }